Securing the Black Box: OpenAI, Anthropic, and GDM Discuss

2024-05-06

Humans naturally fear the unknown, and the rapid progress of AI has raised understandable concerns: uncanny robocalls, data breaches, and floods of misinformation are among them. But what does security look like in the era of large language models?

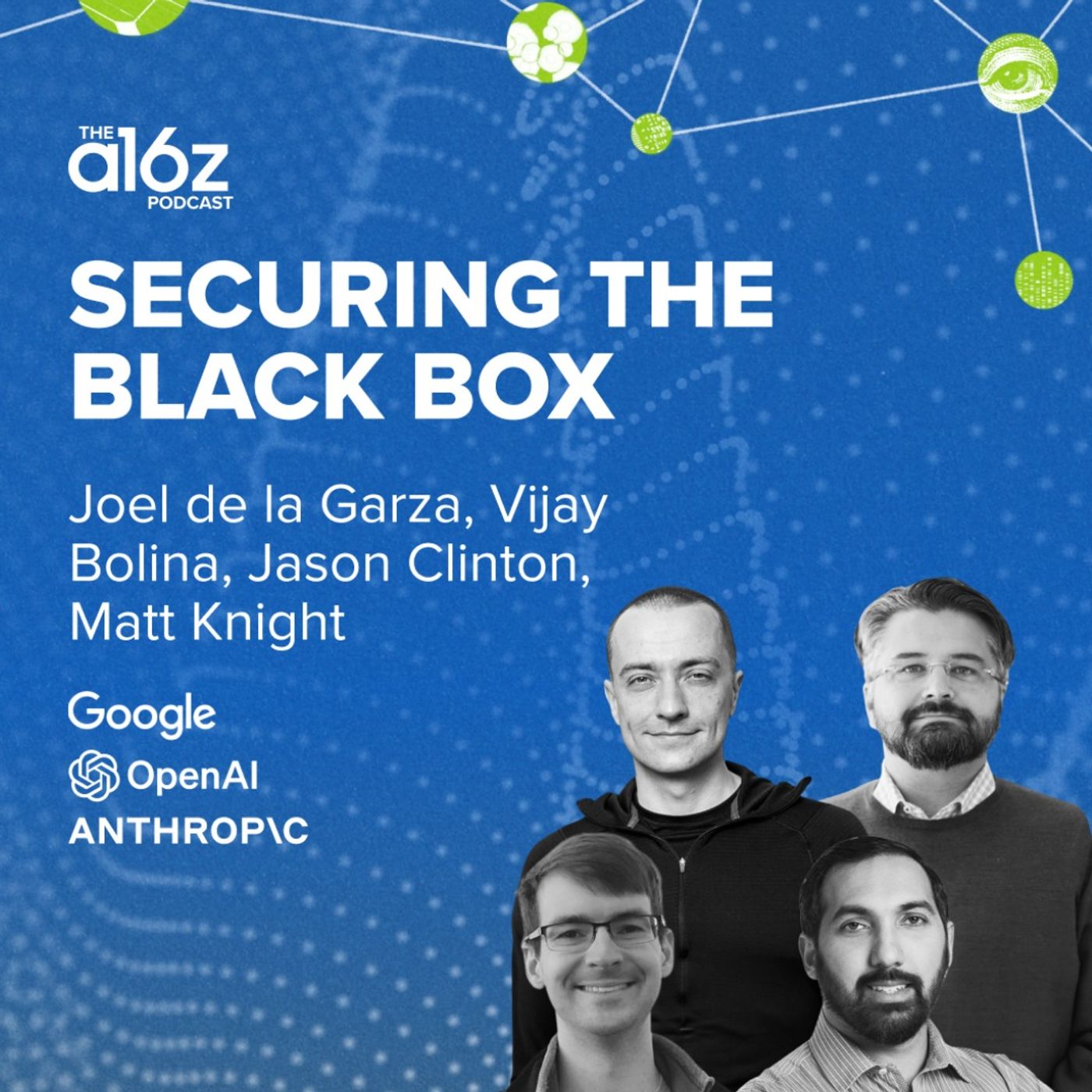

In this episode, we hear from security leaders at OpenAI, Anthropic, and Google DeepMind: Matt Knight, Head of Security at OpenAI; Jason Clinton, CISO at Anthropic; and Vijay Bolina, CISO at Google DeepMind. They are joined by Joel de la Garza, operating partner at a16z and former chief security officer at Box and Citigroup.

Together, they explore how large language models are changing security, including shifts in offensive and defensive strategies, misuse by nation-state actors, prompt engineering, and more. As the landscape evolves, how exactly do LLMs transform security dynamics? Let's find out.

Resources:

Find Joel on LinkedIn: https://www.linkedin.com/in/3448827723723234/

Find Vijay Bolina on Twitter: https://twitter.com/vijaybolina

Find Jason Clinton on Twitter: https://twitter.com/JasonDClinton

Find Matt Knight on Twitter: https://twitter.com/embeddedsec

Stay Updated:

Find a16z on Twitter: https://twitter.com/a16z

Find a16z on LinkedIn: https://www.linkedin.com/company/a16z

Subscribe on your favorite podcast app: https://a16z.simplecast.com/

Follow our host: https://twitter.com/stephsmithio

Please note that the content here is for informational purposes only; should NOT be taken as legal, business, tax, or investment advice or be used to evaluate any investment or security; and is not directed at any investors or potential investors in any a16z fund. a16z and its affiliates may maintain investments in the companies discussed. For more details please see a16z.com/disclosures.